Data Preparation

Once you have finalized your keywords (head terms and modifiers), it’s time to collect all the data the programmatic build process requires. In this section, you will learn about different sources and methods for collecting data for your programmatic SEO project.

If you already have the data, you can skip this section. From here on, I will assume that you don’t have a dataset yet.

The data collection process depends on the topics that you have finalized and the type of posts that you will be creating.

For example, if the topic you have finalized is “best places to visit in {city}”, you will need at least the following data points:

- Name of the city

- Country/state the city is in

- List of all the interesting places

- Best time of the year to visit

- Distance from the nearest airport/railway station

- Estimated cost

- Good hotels in the city to stay in, etc.
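Before collecting anything, it can help to write these data points down as a record type, so you can see at a glance which fields each page is still missing. The sketch below is a minimal Python example; the `CityPage` class, its field names, and the `render_title` helper are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical record for one "best places to visit in {city}" page.
# Field names are illustrative, not a required schema.
@dataclass
class CityPage:
    city: str
    country: str  # country or state the city is in
    places: list[str] = field(default_factory=list)
    best_time_to_visit: str = ""
    nearest_station_km: Optional[float] = None  # distance to nearest airport/railway station
    estimated_cost: Optional[float] = None
    hotels: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of data points that are still empty, in field order."""
        return [name for name, value in vars(self).items() if value in (None, "", [])]


TITLE_TEMPLATE = "Best places to visit in {city}"


def render_title(page: CityPage) -> str:
    """Fill the page-title template from one record."""
    return TITLE_TEMPLATE.format(city=page.city)
```

For instance, `render_title(CityPage(city="Jaipur", country="India"))` returns `"Best places to visit in Jaipur"`, and calling `missing_fields()` on that record lists every field you still need to collect.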

No single site or method will give you every data point your project needs, so you will usually combine several sources. Let’s go through each of them in detail:

1. Use your country's government data

Almost every country’s government publishes datasets and APIs that you can use in your project. They are generally free to use, but make sure to read the terms and conditions first.

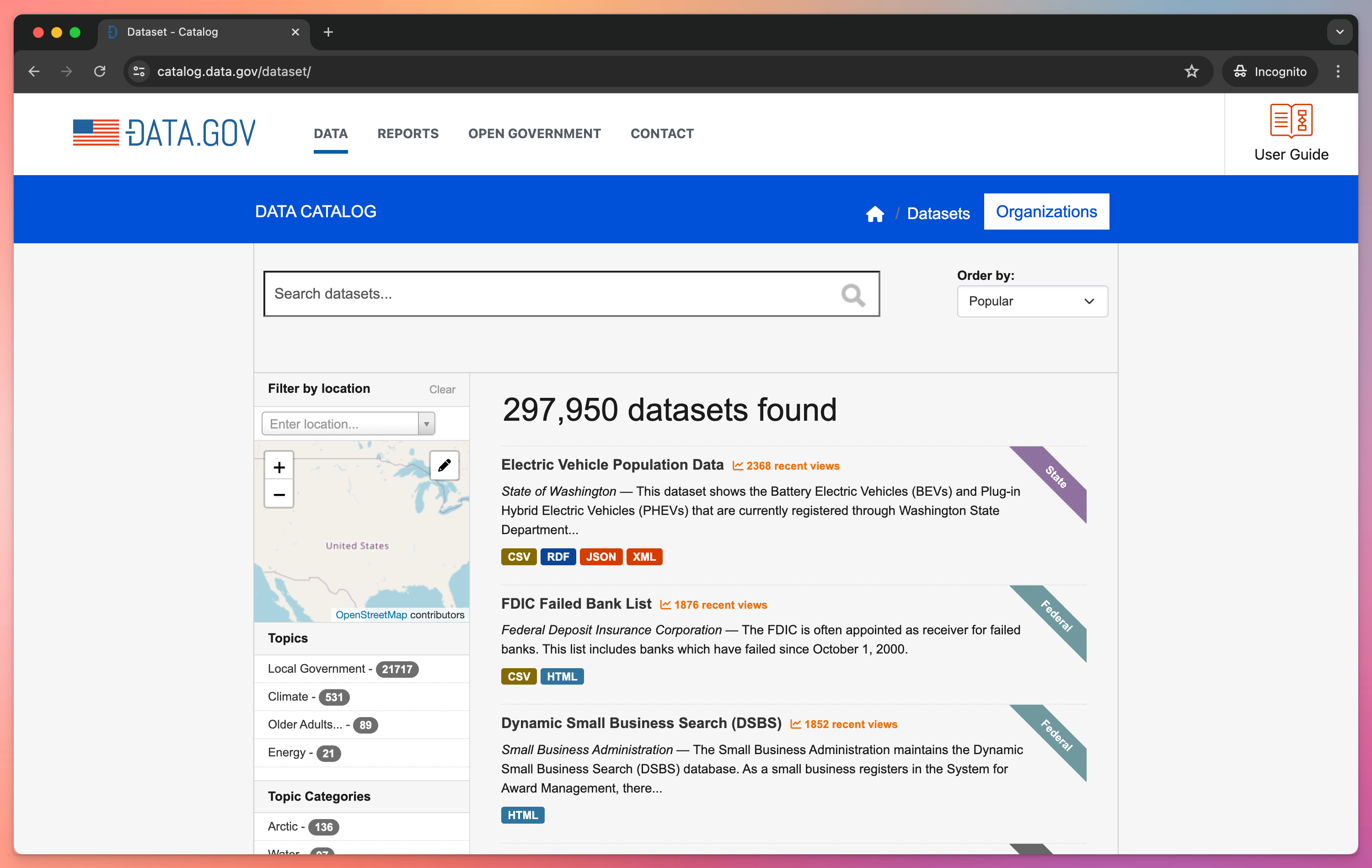

For example, the US government's data website https://data.gov/ has more than 300k different datasets related to agriculture, commerce, defense, energy, health, transportation, business, etc.

Another example is the Indian government’s site https://data.gov.in/, which offers thousands of datasets from sectors such as health, sports, biotechnology, travel, tourism, and technology. Most of the data is available in simple CSV format, but thousands of APIs are available too.
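Because portals like these mostly serve CSV files, the first processing step is usually keeping only the columns your pages need. Here is a minimal sketch using Python’s standard `csv` module; the `load_columns` helper is hypothetical, and any column names you pass to it are assumptions, so check the real header row of the file you download.

```python
import csv
from typing import Iterable


def load_columns(lines: Iterable[str], wanted: list[str]) -> list[dict[str, str]]:
    """Read CSV text and keep only the `wanted` columns.

    Rows where any wanted column is blank are skipped, since a page
    built from them would have gaps.
    """
    reader = csv.DictReader(lines)
    header = reader.fieldnames or []  # reading fieldnames consumes the header row
    missing = [col for col in wanted if col not in header]
    if missing:
        raise ValueError(f"CSV is missing columns: {missing}")
    rows = []
    for row in reader:
        # DictReader uses None for cells missing at the end of a short row.
        record = {col: (row[col] or "").strip() for col in wanted}
        if all(record.values()):
            rows.append(record)
    return rows
```

To use it on a downloaded file, open the file with `newline=""` (as the `csv` documentation recommends) and pass the file object together with the list of column names you want to keep.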

2. Look for other public data

There are many other public datasets on various topics that you can access and use. You can find most of them directly on Google or other search engines by searching for “{topic} datasets”.

Before using any public data, make sure you have proper rights to use the data in your projects.

a. Find datasets on GitHub

There are thousands of datasets on GitHub, and you can find them in two ways:

Search directly on GitHub.com using terms that describe the data you need. For example, if I were looking for food nutrition data, I’d search for “food nutrition data” on GitHub.

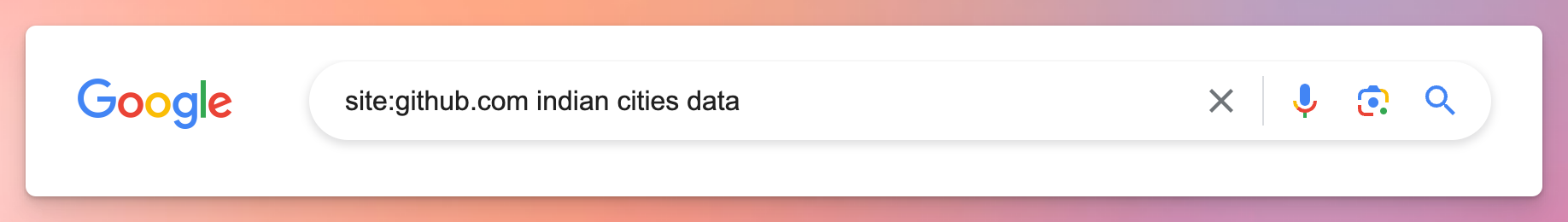

Use Google’s site: search operator to find what you’re looking for on GitHub. For example, if I were looking for data related to Indian cities, I’d google site:github.com indian cities data (as you can see in the screenshot below).

To get started, check out Awesome Public Datasets, a GitHub repository that collects hundreds of datasets across many categories. Most of the listed datasets are free to use.
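Searching GitHub can also be scripted through GitHub’s REST endpoint for repository search. This is a sketch that assumes unauthenticated access (which GitHub rate-limits heavily) and uses only the standard library; the helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

GITHUB_SEARCH = "https://api.github.com/search/repositories"


def build_search_url(terms: str, per_page: int = 10) -> str:
    """Build a GitHub repository-search URL, most-starred results first."""
    params = {"q": terms, "sort": "stars", "order": "desc", "per_page": per_page}
    return f"{GITHUB_SEARCH}?{urllib.parse.urlencode(params)}"


def parse_repos(payload: str) -> list[tuple[str, str]]:
    """Extract (full_name, html_url) pairs from a search response body."""
    data = json.loads(payload)
    return [(item["full_name"], item["html_url"]) for item in data.get("items", [])]


def search_github(terms: str) -> list[tuple[str, str]]:
    """Run the search (needs network access; anonymous calls are rate-limited)."""
    req = urllib.request.Request(
        build_search_url(terms),
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return parse_repos(resp.read().decode("utf-8"))
```

Calling `search_github("food nutrition data")` would return the repository names and links from the first page of results, which you can then review by hand.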

b. Find public .xls files

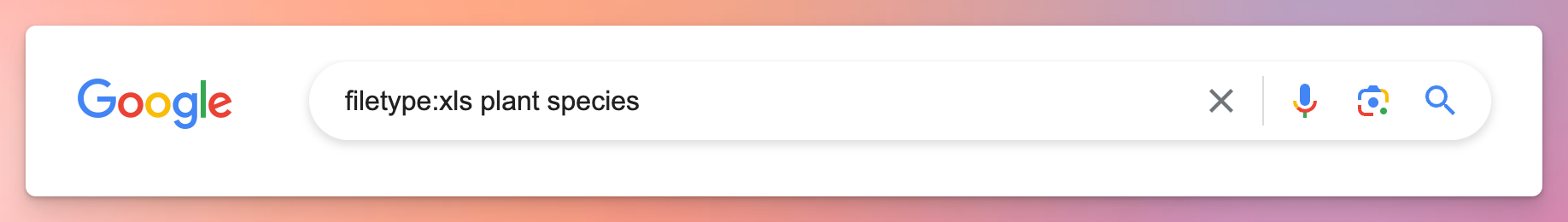

There are many Excel files indexed on Google that you can search for by using the filetype: search operator.

For example, if I were trying to find XLS files related to plant species, I’d google filetype:xls plant species, as you can see in the screenshot above. The results link directly to .xls files that you can download with a single click.

You can also find numerous PDF files by using the same method, but extracting data from PDF files is a bit complicated.
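The site: and filetype: operators are just text placed in front of your search terms, so if you run many of these searches, a tiny helper keeps them consistent. This sketch only builds query strings and an ordinary Google search URL; it does not fetch or scrape Google’s results pages, and the function names are hypothetical.

```python
from typing import Optional
from urllib.parse import quote_plus


def build_query(terms: str, site: Optional[str] = None, filetype: Optional[str] = None) -> str:
    """Prefix search terms with optional site: and filetype: operators."""
    parts = []
    if site:
        parts.append(f"site:{site}")
    if filetype:
        parts.append(f"filetype:{filetype}")
    parts.append(terms)
    return " ".join(parts)


def google_search_url(query: str) -> str:
    """URL-encode a query for a normal Google results page."""
    return "https://www.google.com/search?q=" + quote_plus(query)
```

Here `build_query("plant species", filetype="xls")` gives `filetype:xls plant species`, and `build_query("indian cities data", site="github.com")` reproduces the GitHub search described earlier.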

c. Find public Google Sheets spreadsheets

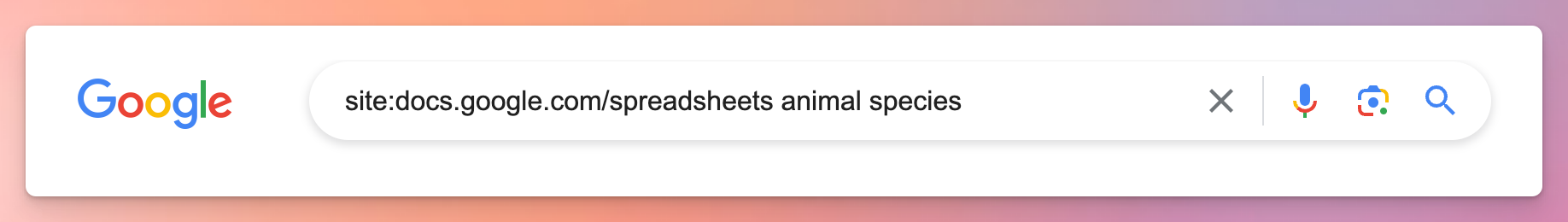

Just like public .xls files, plenty of Google Sheets spreadsheets are indexed on Google, and you can find them with the site: operator.

For example, if you’re looking for data on animal species, then google the term site:docs.google.com/spreadsheets animal species, and a bunch of Google Sheets spreadsheets will appear.
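Once you find a useful spreadsheet, you usually don’t need to copy it by hand: a publicly shared Google Sheet can typically be downloaded as CSV by rewriting its URL to the `/export?format=csv` form. This is a sketch that assumes the sheet is viewable by anyone with the link; `csv_export_url` is a hypothetical helper name.

```python
import re

# The sheet ID is the path segment after /spreadsheets/d/.
_ID_RE = re.compile(r"/spreadsheets/d/([A-Za-z0-9_-]+)")
# An optional tab ID, e.g. "#gid=0" at the end of an edit link.
_GID_RE = re.compile(r"[#&?]gid=(\d+)")


def csv_export_url(sheet_url: str) -> str:
    """Turn a Google Sheets link into its CSV export link.

    Works only for sheets shared as 'anyone with the link can view'.
    """
    match = _ID_RE.search(sheet_url)
    if not match:
        raise ValueError("not a Google Sheets URL")
    url = f"https://docs.google.com/spreadsheets/d/{match.group(1)}/export?format=csv"
    gid = _GID_RE.search(sheet_url)
    if gid:
        # Keep the specific tab the link pointed to.
        url += f"&gid={gid.group(1)}"
    return url
```

You can then fetch the resulting URL with `urllib.request.urlopen` and parse it with Python’s `csv` module like any other CSV file.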

d. Search through Reddit communities

Reddit has several active communities where you can request and share datasets on various topics. The most popular is r/datasets; others include r/opendata, r/data, and r/DataHoarder.

For example, in one of these Reddit communities I found a massive dataset of more than 107 million journal articles, 4.7 TiB in size, all of which can be downloaded for free, as you can see in the screenshot below.
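If you want to watch these communities for new dataset posts, Reddit serves most listing pages as JSON when you add `.json` to the path. This is a sketch under that assumption, using only the standard library. Reddit expects a descriptive User-Agent header and may rate-limit or block anonymous requests, and the helper names and User-Agent string are placeholders.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Placeholder User-Agent; replace it with one that identifies your project.
USER_AGENT = "dataset-finder/0.1 (hypothetical example)"


def reddit_search_url(subreddit: str, terms: str, limit: int = 25) -> str:
    """Build a search URL restricted to one subreddit, newest posts first."""
    params = {"q": terms, "restrict_sr": "1", "sort": "new", "limit": limit}
    return f"https://www.reddit.com/r/{subreddit}/search.json?{urlencode(params)}"


def parse_listing(payload: str) -> list[tuple[str, str]]:
    """Return (title, full post URL) pairs from a Reddit listing response."""
    data = json.loads(payload)
    return [
        (child["data"]["title"], "https://www.reddit.com" + child["data"]["permalink"])
        for child in data["data"]["children"]
    ]


def search_subreddit(subreddit: str, terms: str) -> list[tuple[str, str]]:
    """Fetch and parse one page of results (requires network access)."""
    req = urllib.request.Request(
        reddit_search_url(subreddit, terms), headers={"User-Agent": USER_AGENT}
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return parse_listing(resp.read().decode("utf-8"))
```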

e. Look for public APIs

Just like public datasets, you can also find public APIs to use in your programmatic SEO projects. One of the largest API marketplaces is RapidAPI, which lists thousands of free and paid APIs.

For example, Alpha Vantage is a free API on RapidAPI that you can use to receive stock, forex, technical indicators, and cryptocurrency data.
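Most data APIs follow the same pattern: build a URL with query parameters, send the request, and pick the fields you need out of the JSON response. The sketch below calls Alpha Vantage’s own endpoint (`https://www.alphavantage.co/query`) with its `TIME_SERIES_DAILY` function, and it assumes the response keys `Time Series (Daily)` and `4. close` from Alpha Vantage’s documentation. If you subscribe through RapidAPI instead, requests go to the host shown on the RapidAPI listing with `X-RapidAPI-Key` and `X-RapidAPI-Host` headers, so check that listing for the exact details. The helper names are hypothetical.

```python
import json
import urllib.request
from urllib.parse import urlencode

BASE_URL = "https://www.alphavantage.co/query"


def daily_series_url(symbol: str, api_key: str) -> str:
    """Build the request URL for one symbol's daily price series."""
    params = {"function": "TIME_SERIES_DAILY", "symbol": symbol, "apikey": api_key}
    return f"{BASE_URL}?{urlencode(params)}"


def closing_prices(payload: str) -> dict[str, float]:
    """Map each date to its closing price, newest date first."""
    data = json.loads(payload)
    series = data.get("Time Series (Daily)")
    if series is None:
        # Error and rate-limit responses use other top-level keys.
        raise ValueError(f"unexpected response keys: {list(data)}")
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    return {day: float(values["4. close"]) for day, values in sorted(series.items(), reverse=True)}


def fetch_closing_prices(symbol: str, api_key: str) -> dict[str, float]:
    """Request and parse the series (requires network access and an API key)."""
    with urllib.request.urlopen(daily_series_url(symbol, api_key), timeout=10) as resp:
        return closing_prices(resp.read().decode("utf-8"))
```

Keeping the URL building and the parsing in separate functions lets you test the parsing on a saved response without spending API calls.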

f. Use Google Dataset Search tool

Google has a lesser-known tool called Dataset Search that lets you search specifically for datasets. Type your query into the search box, hit Enter, and you will get a list of matching datasets.

For example, when I searched for COVID-19 data, I found an interesting dataset that was free to use.

3. Hire an experienced scraper

Data scraping is complicated and time-consuming, so unless you’re an expert, hiring an experienced scraper to collect the data for your programmatic SEO project can be a good option.

The person you hire should be able to help you both scrape the data and clean it, so that you can focus on what matters most. Make sure you communicate your requirements clearly beforehand.
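Whether you or a freelancer does the scraping, it helps to agree on what “clean” means. Below is a minimal sketch of two common cleaning steps, collapsing stray whitespace and dropping duplicate rows by a key column. `clean_records` is a hypothetical helper, and real projects usually need more rules (date formats, units, encodings).

```python
import re


def clean_records(records: list[dict[str, str]], key: str) -> list[dict[str, str]]:
    """Collapse whitespace in every field, drop rows with an empty `key`,
    and drop duplicates (case-insensitive on `key`), keeping the first one."""
    seen = set()
    cleaned = []
    for rec in records:
        # Turn runs of spaces, tabs, and newlines into single spaces.
        tidy = {k: re.sub(r"\s+", " ", v or "").strip() for k, v in rec.items()}
        ident = tidy.get(key, "").casefold()
        if not ident or ident in seen:
            continue
        seen.add(ident)
        cleaned.append(tidy)
    return cleaned
```

For example, `clean_records(rows, key="name")` would keep “Hawa Mahal” only once, even if the scraper captured it as both “Hawa   Mahal” and “hawa mahal”.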

On freelancing platforms like Upwork, you can hire web scraping experts for as little as $10 per hour.